A robots.txt file acts as an instruction manual for web crawlers visiting your site or blog. It tells bots (also called crawlers or spiders) where they can go on your website.

With this file, you tell bots what they are allowed to crawl on your site and what is off-limits.

The robots.txt file is a powerful tool for your site’s SEO. It lets you improve your SEO by taking advantage of a standard part of every website that rarely gets talked about.

Here is why.

Search bots have a crawl quota for each website. That is, they crawl only a certain number of pages during a crawl session. If they don’t finish crawling all the pages on your site, they will come back and resume the crawl in the next session.

This can slow down your website’s indexing rate.

As a solution, you can disallow search bots from crawling pages that don’t need to appear in search results. Doing this saves your crawl quota and helps search engines crawl more of the pages that matter and index them as quickly as possible.

The syntax for a robots.txt file:

User-agent: [user-agent name]

Disallow: [URL string not to be crawled]

User-agent: [user-agent name]

Allow: [URL string to be crawled]

Sitemap: [URL of your XML Sitemap]

This is the basic format of a robots.txt file. You can add multiple instructions to allow or disallow specific URLs or directories. Similarly, you can add multiple sitemaps. If you don’t list any URL to disallow, search engine bots can crawl any URL (as mentioned above).
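To see how these directives are read in practice, here is a minimal sketch using Python’s standard-library `urllib.robotparser`. The bot names `ExampleBot` and `OtherBot` and the `/drafts/` path are made up for illustration. Note that Python’s parser is only one interpretation of the format, so individual search engines may resolve edge cases differently.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: one group for a bot called "ExampleBot",
# and a catch-all group with an empty Disallow (nothing is blocked).
RULES = """\
User-agent: ExampleBot
Disallow: /drafts/

User-agent: *
Disallow:
"""

parser = RobotFileParser()
parser.parse(RULES.splitlines())

# ExampleBot matches its own group, so /drafts/ is off-limits for it.
examplebot_drafts = parser.can_fetch("ExampleBot", "/drafts/post-1")

# Any other bot falls back to the "*" group, where nothing is disallowed.
otherbot_drafts = parser.can_fetch("OtherBot", "/drafts/post-1")

print(examplebot_drafts, otherbot_drafts)
```

Each `User-agent` line starts a group, and the `Allow` and `Disallow` lines that follow apply only to the bots named in that group.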

For example:

User-Agent: *

Allow: /wp-content/uploads/

Disallow: /wp-content/plugins/

Disallow: /wp-admin/

Sitemap: http://www.example.com/post-sitemap.xml

Sitemap: http://www.example.com/page-sitemap.xml

In the above example, I’ve allowed search bots to crawl the uploads folder and disallowed them from crawling the WordPress plugin files and the WordPress admin area.
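The example rules above can be checked locally before you publish them. The sketch below feeds them to Python’s standard-library `urllib.robotparser` and asks whether a few paths may be crawled. The file names such as `photo.jpg` and the `/hello-world/` post are assumptions chosen for illustration, and `site_maps()` requires Python 3.8 or newer.

```python
from urllib.robotparser import RobotFileParser

# The example rules from this article, written without blank lines
# between the directives of the group.
EXAMPLE = """\
User-agent: *
Allow: /wp-content/uploads/
Disallow: /wp-content/plugins/
Disallow: /wp-admin/

Sitemap: http://www.example.com/post-sitemap.xml
Sitemap: http://www.example.com/page-sitemap.xml
"""

parser = RobotFileParser()
parser.parse(EXAMPLE.splitlines())

# Hypothetical paths used only to exercise each rule.
paths = [
    "/wp-content/uploads/photo.jpg",
    "/wp-content/plugins/some-plugin/script.js",
    "/wp-admin/",
    "/hello-world/",
]

# Map each path to True (crawlable) or False (blocked).
results = {p: parser.can_fetch("*", "http://www.example.com" + p) for p in paths}

# Sitemap URLs declared in the file (Python 3.8+).
sitemaps = parser.site_maps()

for path, allowed in results.items():
    print(path, "allowed" if allowed else "blocked")
print(sitemaps)
```

Paths that match no rule, like the sample post here, stay crawlable by default.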

So, this is what a well-formed robots.txt file looks like. Now, let’s see how to create a robots.txt file for your site.

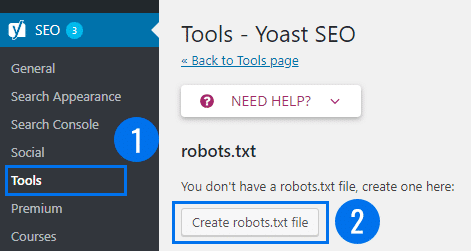

For this task, I strongly recommend using the Yoast SEO plugin, which lets you create and edit the file directly from your WordPress admin area. Yoast SEO can create a robots.txt file for you.

Install Yoast SEO and navigate to the Tools page under the SEO menu. Here you can see the robots.txt file of your website or blog. If you don’t have a robots.txt file, create one by clicking the Create robots.txt file button.

If you don’t see the file editor option, file editing has most likely been disabled to harden WordPress security. You can re-enable it by removing the following line from the wp-config.php file.

define('DISALLOW_FILE_EDIT', true);

Your existing robots.txt file may appear as:

User-agent: *

Disallow: /

You have to delete this text, as it blocks all search engines from your entire site. Once it is deleted, you can edit the file as I described above.
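If you want to confirm whether a robots.txt file shuts bots out of your homepage, a quick check along these lines can help. It is a minimal sketch built on Python’s standard-library `urllib.robotparser`; the helper name `homepage_blocked` is my own and not part of any plugin or tool.

```python
from urllib.robotparser import RobotFileParser


def homepage_blocked(robots_txt: str, user_agent: str = "*") -> bool:
    """Return True if the given rules stop user_agent from crawling "/"."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, "/")


# The block-everything file shown above.
block_all = "User-agent: *\nDisallow: /"

# A file that only blocks the admin area.
block_admin = "User-agent: *\nDisallow: /wp-admin/"

print(homepage_blocked(block_all))
print(homepage_blocked(block_admin))
```

A `Disallow: /` rule matches every URL on the site because every path starts with `/`, which is why the first file blocks the homepage and the second does not.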

Now test the file using a robots.txt tester tool. The best one available is in Google Search Console.

Open Google Search Console and launch the robots.txt tester tool located under the ‘Crawl’ menu.

It will automatically fetch your website’s robots.txt file and highlight any errors and warnings it finds.

Do not block CSS or JS folders. A bot can render your site the way a real user sees it, so if your pages need JS and CSS to function properly, those files should not be blocked.
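One way to catch accidentally blocked assets is to run the CSS and JS paths your pages load through your rules. The sketch below does this with Python’s standard-library `urllib.robotparser`. The helper `blocked_assets`, the theme name `my-theme`, and the plugin name `my-plugin` are hypothetical, so substitute the asset paths your own pages use.

```python
from urllib.robotparser import RobotFileParser


def blocked_assets(robots_txt, asset_paths, user_agent="*"):
    """Return the asset paths that the given rules block for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [p for p in asset_paths if not parser.can_fetch(user_agent, p)]


RULES = """\
User-agent: *
Disallow: /wp-content/plugins/
Disallow: /wp-admin/
"""

# Hypothetical CSS/JS files that a page might load.
ASSETS = [
    "/wp-content/themes/my-theme/style.css",
    "/wp-content/plugins/my-plugin/slider.js",
]

blocked = blocked_assets(RULES, ASSETS)
print(blocked)
```

In this sample the plugin script is reported as blocked, which shows that a broad rule on the plugins folder can also catch scripts your pages depend on. If a path shows up in the list and your pages need it, consider adding a more specific `Allow` rule or narrowing the `Disallow`.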

Conclusion:

The basic aim of optimizing your robots.txt file is to keep search engine bots away from pages that are not meant for the public. With this guide, I hope you won’t have to invest too much time configuring or testing your robots.txt.

Share your feedback in the comment section below.